How to fine-tune an open-source LLM?

Mistral AI, founded in France in April 2023, is at the forefront of AI, specialising in open-source large language models (LLMs). By offering open-source software, Mistral AI provides a powerful alternative to proprietary models. Established by three former employees of Meta Platforms and Google DeepMind, the company quickly gained traction, raising $428 million by October 2023 and reaching a valuation of over $2 billion by December 2023. We are excited to explore the benefits of fine-tuning a pre-trained Mistral 7B model to enhance its understanding of our proprietary documents.

Key Concepts

Mistral has released two models as downloadable weights; three additional models (Small, Medium, and Large) are accessible exclusively via API.

Training a large language model (LLM) from scratch is significantly more expensive than fine-tuning an open-source one. For instance, training Google’s Gemini Ultra cost an estimated $191 million, OpenAI’s GPT-4 an estimated $78 million, and Databricks’ DBRX a reported $10 million.

Deep Dive

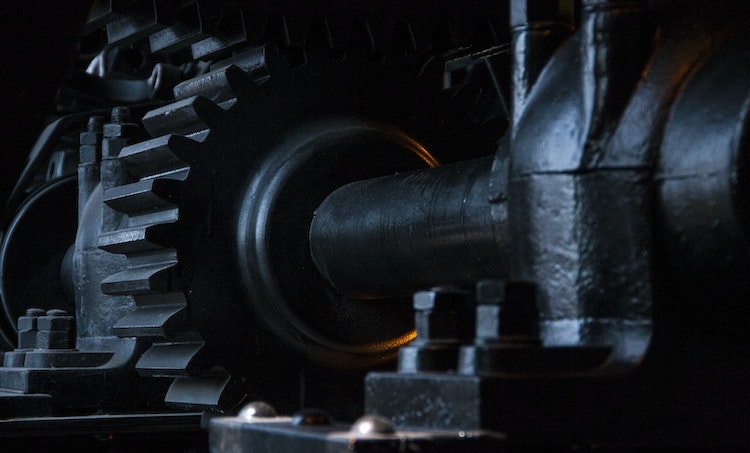

Mistral 7B is an LLM with 7.3 billion parameters, built on the transformer architecture. It was officially released in September 2023 under the Apache 2.0 license. Mistral reports that the model surpasses LLaMA 2 13B on all tested benchmarks and matches LLaMA 1 34B on many benchmarks. Mistral 7B employs grouped-query attention (GQA), a variant of multi-head attention in which groups of query heads share a single key head and value head, which shrinks the key-value cache and speeds up inference. Mistral released two versions: a base model and an instruct model, which has been fine-tuned to respond to chat-style prompts.
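To make grouped-query attention concrete, the sketch below maps query heads to shared key/value heads and estimates the key-value cache size. The head counts, layer count, and head dimension come from Mistral's published model configuration, not from this article. The function names, the contiguous head-to-group mapping, the 4,096-token sequence length, and 16-bit storage are assumptions chosen for illustration.

```python
# Attention shape from Mistral 7B's published configuration (not from this article).
N_LAYERS = 32
N_QUERY_HEADS = 32
N_KV_HEADS = 8
HEAD_DIM = 128


def kv_head_for(query_head: int,
                n_query_heads: int = N_QUERY_HEADS,
                n_kv_heads: int = N_KV_HEADS) -> int:
    """Return the index of the key/value head shared by this query head.

    Assumes contiguous grouping: query heads 0..3 use KV head 0, and so on.
    """
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_bytes(seq_len: int, n_kv_heads: int, bytes_per_value: int = 2) -> int:
    """Key plus value cache size for one sequence (default: 16-bit values)."""
    keys_and_values = 2
    return (keys_and_values * N_LAYERS * n_kv_heads * HEAD_DIM
            * seq_len * bytes_per_value)


# Hypothetical sequence length, used only to compare the two layouts.
gqa = kv_cache_bytes(seq_len=4096, n_kv_heads=N_KV_HEADS)
mha = kv_cache_bytes(seq_len=4096, n_kv_heads=N_QUERY_HEADS)

print([kv_head_for(h) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
print(gqa // 2**20, "MiB with GQA;", mha // 2**20, "MiB with one KV head per query head")
```

Under these assumptions, sharing each key/value head among four query heads cuts the cache to a quarter of what one key/value head per query head would need.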

Setup & Testing

Before fine-tuning, the base Mistral 7B model answers from general knowledge alone. Because its trained weights are openly available, we can fine-tune it on our own data, in this case a 181-page contract.

To prepare the training data, we split the contract text into chunks, each with a maximum of 512 characters. During fine-tuning, we monitor the training loss and validation loss, which measure the difference between the model’s predictions and the ground-truth labels in the dataset. At each training step, the model updates its weights using a fixed number of examples (the batch size). Our objective is to minimise both the training loss and the validation loss.
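One simple way to produce such chunks is sketched below. The article does not say how chunk boundaries were chosen, so splitting on whitespace, the `chunk_text` name, and the placeholder input are assumptions; only the 512-character cap comes from the text above.

```python
def chunk_text(text: str, max_chars: int = 512) -> list[str]:
    """Split text into chunks of at most max_chars characters.

    Words are kept whole where possible; a single word longer than the
    limit is cut into hard slices.
    """
    chunks: list[str] = []
    current = ""
    for word in text.split():
        # Handle an oversized word by flushing and slicing it.
        while len(word) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(word[:max_chars])
            word = word[max_chars:]
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars:
            current = candidate
        else:
            # Adding this word would exceed the cap, so start a new chunk.
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)
    return chunks


# Placeholder text standing in for the contract, which is not reproduced here.
sample = "word " * 300
chunks = chunk_text(sample)
print(len(chunks), max(len(c) for c in chunks))
```

Splitting on whitespace avoids cutting words in half, at the cost of collapsing line breaks; a pipeline that needs to preserve clause or paragraph boundaries would split on those instead.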

Hitting the limits

Fine-tuning the model took only four hours in our case, at a cost of approximately 4 USD (about 1 USD per GPU-hour) on an Nvidia A10G GPU from a cloud service. Additional storage costs also apply. We selected a fine-tuned checkpoint with low training loss, specifically the one at step 4500. Although the checkpoint at step 525 offered a balance of low training loss and low validation loss, its accuracy was lower than that of step 4500.
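The trade-off between the two checkpoints can be expressed as two selection rules, sketched below. The `Checkpoint` type, both function names, and every loss value are hypothetical placeholders, not measurements from this run; treating "balance" as lowest validation loss is also an assumption.

```python
from typing import NamedTuple


class Checkpoint(NamedTuple):
    step: int
    train_loss: float
    val_loss: float


def lowest_train_loss(history: list[Checkpoint]) -> Checkpoint:
    """Pick the checkpoint that fits the training document most closely."""
    return min(history, key=lambda c: c.train_loss)


def best_balance(history: list[Checkpoint]) -> Checkpoint:
    """Pick the lowest validation loss, breaking ties by training loss."""
    return min(history, key=lambda c: (c.val_loss, c.train_loss))


# Placeholder numbers for illustration only; NOT the losses from the run above.
history = [
    Checkpoint(step=100, train_loss=1.9, val_loss=2.0),
    Checkpoint(step=200, train_loss=1.2, val_loss=1.5),
    Checkpoint(step=300, train_loss=0.6, val_loss=1.8),
    Checkpoint(step=400, train_loss=0.2, val_loss=2.4),
]

print(lowest_train_loss(history).step)  # 400
print(best_balance(history).step)       # 200
```

The two rules can disagree, as they do here. Neither loss is a substitute for checking answer accuracy on held-out questions, which is what decided the choice in our case.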

We evaluated the fine-tuned model with 11 test questions. The model answered questions based on the trained document rather than its pre-trained general knowledge. However, the generated answers tended to be verbose, and the accuracy was not as good as with Llama 2 7B.

What’s next?

Recently, there has been an increase in the release of open-source LLMs. Databricks released DBRX in March 2024, and Meta introduced Llama 3 in April 2024. The performance of these open-source LLMs continues to improve, as evidenced by their standings on the LMSYS Chatbot Arena leaderboard.

What’s the verdict?

Fine-tuning an open-source LLM with proprietary documents offers numerous opportunities in the era of generative AI. This approach is both cost-effective and time-efficient. A fine-tuned LLM can perform various language tasks at a lower cost, reduce dependence on online LLM services, and enhance data security.