Does Gemini Improve Accuracy For Contract Analysis?

One of our standard evaluation tests for LLMs assesses their ability to interpret large construction contracts and answer questions at the level of a lawyer performing a commercial assessment. Google released Gemini 1.5 Pro in February 2024. Its breakthrough feature is a large context window that accepts around 1,400 pages of text in a single prompt. We wanted to find out whether this context window removes the need to preprocess documents (for example, chunking and indexing them for retrieval) while maintaining or improving accuracy.

Key Concepts

We performed two core tests. The first ran the legal evaluation using the questions exactly as scripted for the human review process. These include questions such as “Are the warranties appropriate?” or “Is there an appropriate variations regime?”, which require both language understanding and commercial judgement.

The second applied prompt engineering to those questions, giving the LLM extra direction on how to interpret the contract.

Deep Dive

Gemini 1.5 Pro is now accessible via Google AI Studio, and its API was released on 10 April. The service is priced at 7 USD per million input tokens and 21 USD per million output tokens. In our experiment, a 242-page commercial contract of 137,277 tokens costs approximately 1 USD per checklist question, because the entire contract is sent as input with every question.
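The per-question estimate can be checked with a few lines of Python. This is a sketch, assuming the full contract is re-sent with each question; the 1,000-token answer length is a hypothetical figure rather than a measured one, and the handful of tokens in the question text itself is ignored.

```python
# Gemini 1.5 Pro API prices quoted above, in USD per million tokens.
INPUT_PRICE_PER_M = 7.0
OUTPUT_PRICE_PER_M = 21.0

CONTRACT_TOKENS = 137_277      # the 242-page contract used in our test
ASSUMED_ANSWER_TOKENS = 1_000  # hypothetical answer length, not measured
CHECKLIST_QUESTIONS = 72       # size of the evaluation checklist


def cost_per_question(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one call that sends the whole contract and receives one answer."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


if __name__ == "__main__":
    per_question = cost_per_question(CONTRACT_TOKENS, ASSUMED_ANSWER_TOKENS)
    print(f"Per question: {per_question:.2f} USD")
    print(f"Full checklist: {CHECKLIST_QUESTIONS * per_question:.2f} USD")
```

Under these assumptions the input alone accounts for about 0.96 USD, so the answer length barely moves the total, and running all 72 checklist questions comes to roughly 70 USD.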

We used Google AI Studio to upload the contract documents, ask the checklist questions and review the responses.

Setup & Testing

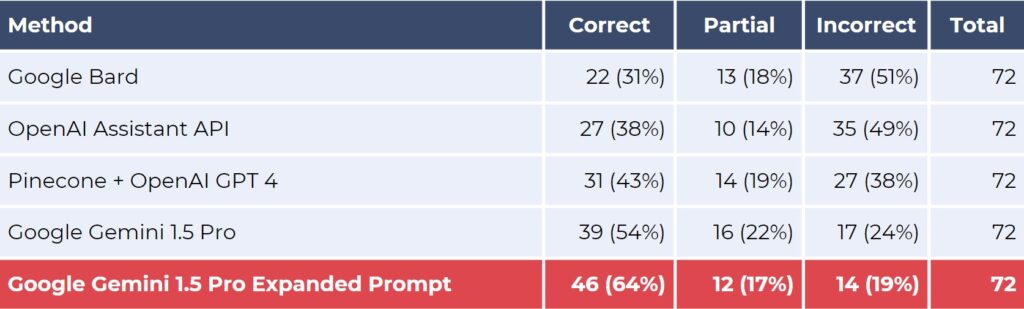

The main evaluation focussed on response accuracy across a set of 72 checklist questions. We compared Gemini 1.5 Pro with the approaches we had tested earlier: retrieval augmented generation (RAG) using Pinecone and OpenAI GPT-4, the OpenAI Assistants API, and Google Bard.

The experiment also broke the checklist questions down into step-by-step prompts to test whether this approach improves accuracy, with the aim of making AI responses more precise on complex queries.
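To make the idea of step-by-step prompts concrete, here is a minimal sketch that turns one checklist question into an ordered series of sub-prompts. The sub-steps for the variations question are hypothetical and written for illustration only; they are not the prompts used in our experiment, and `build_step_prompts` is our own illustrative name.

```python
# Hypothetical decomposition of one checklist question into ordered sub-steps.
# Illustrative only: these are not the prompts used in the experiment.
VARIATIONS_QUESTION = "Is there an appropriate variations regime?"
VARIATIONS_STEPS = [
    "Identify every clause in the contract that deals with variations or changes to the works.",
    "Summarise who may instruct a variation and the procedure for doing so.",
    "Explain how variations are valued and how time and cost adjustments are agreed.",
    "Using the findings above, state whether the variations regime is appropriate, citing clause numbers.",
]


def build_step_prompts(question: str, steps: list[str]) -> list[str]:
    """Prefix each step with the overall checklist question so every turn keeps the goal in view."""
    return [
        f"Checklist question: {question}\nStep {i} of {len(steps)}: {step}"
        for i, step in enumerate(steps, start=1)
    ]


if __name__ == "__main__":
    for prompt in build_step_prompts(VARIATIONS_QUESTION, VARIATIONS_STEPS):
        print(prompt, end="\n\n")
```

In this sketch each prompt would be sent in turn within the same conversation, so the model can build on its earlier answers before giving the final commercial judgement in the last step.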

Hitting The Limits

Google Gemini 1.5 Pro outperformed the other approaches we tested (Google Bard, the OpenAI Assistants API, and RAG using Pinecone and OpenAI GPT-4) in accuracy. With the extended prompts, Gemini 1.5 Pro's accuracy rose to 64% and its error rate fell to 19%. This demonstrates Gemini 1.5 Pro's capability in understanding and processing legal documents and queries.
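Accuracy and error rate of this kind can be computed from per-question grades. The sketch below assumes a hypothetical three-way grading in which a reviewer marks each answer as correct, partial or incorrect. This scheme is our illustration rather than a documented part of the evaluation, though a middle category would be consistent with 64% and 19% not summing to 100%.

```python
from collections import Counter

# Hypothetical grading labels; the evaluation's actual rubric is an assumption here.
ALLOWED_GRADES = {"correct", "partial", "incorrect"}


def score(grades: list[str]) -> dict[str, float]:
    """Return accuracy (share graded correct) and error rate (share graded incorrect)."""
    if not grades:
        raise ValueError("no graded answers")
    unknown = set(grades) - ALLOWED_GRADES
    if unknown:
        raise ValueError(f"unexpected grades: {sorted(unknown)}")
    counts = Counter(grades)
    total = len(grades)
    return {
        "accuracy": counts["correct"] / total,
        "error_rate": counts["incorrect"] / total,
    }
```

For example, `score(["correct", "correct", "partial", "incorrect"])` gives an accuracy of 0.5 and an error rate of 0.25; under this scheme partial answers count towards neither figure.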

What’s Next?

We will continue to evaluate LLMs against this benchmark and extend the prompt engineering work to improve Gemini's performance further. We also plan to train our own model on top of an open-source foundation model and assess its performance.

What’s The Verdict?

As generative AI evolves rapidly, we expect platforms such as Google Gemini and OpenAI GPT to achieve better accuracy in analysing contracts and other complex documents. This advancement will make generative AI a pivotal tool for enhancing productivity across a wide range of fields.