We’re all aware that digital discrimination has become a serious issue as more and more decisions are being made by artificial intelligence-based systems.

Take text-to-image generation models such as DALL-E, Midjourney, and Stable Diffusion as examples. They are among the most innovative and advanced technologies to emerge from the field of AI in the past year.

But even the most cutting-edge technology isn’t immune to bias, as we’ve discovered with practically every AI and machine learning advancement in recent memory. These AI image generators are no exception.

AI systems are only as unbiased and fair as the data they are trained on. Unfortunately, a lot of the datasets that are used to train AI systems are flawed, biased, or discriminatory, reflecting the underlying preconceptions and biases of the people who generated them.

As a result, biases and discrimination may be perpetuated and even amplified by AI systems, harming both individuals and communities.

Credit scores, insurance payouts, and health assessments are just a few of the outcomes that automated decisions can affect. These automated decisions become a problem when they systematically disfavour particular individuals or groups.

Digital discrimination is pervasive across a wide range of domains, from credit scoring to the risk assessment tools used by law enforcement.

So, how can we address this discrimination?

Understanding the root causes of discrimination and bias in AI systems is the first step towards finding solutions.

Data selection bias, data labelling bias, and algorithmic bias are some of the causes of AI bias.

Data selection bias, for instance, can happen when training data favour one group or trait over another, biasing an AI system to favour that group.
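
One simple way to spot this kind of skew is to compare each group's share of the training data with its share of the population the system is meant to serve. The sketch below is only an illustration: the group names, the reference shares, and the `tolerance` threshold are all hypothetical, and a real check would use whatever attributes and benchmarks suit the dataset.

```python
from collections import Counter


def group_shares(groups):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(groups, reference, tolerance=0.1):
    """List groups whose dataset share falls short of the reference share
    by more than `tolerance` (an arbitrary threshold chosen for this sketch)."""
    shares = group_shares(groups)
    return sorted(
        group
        for group, expected in reference.items()
        if expected - shares.get(group, 0.0) > tolerance
    )


# Hypothetical training set: 80 records from group "A", 20 from group "B".
training_groups = ["A"] * 80 + ["B"] * 20
# Hypothetical reference population, split evenly between the two groups.
reference_shares = {"A": 0.5, "B": 0.5}

flagged = underrepresented(training_groups, reference_shares)
print(flagged)
```

Here group "B" makes up 20% of the data against an expected 50%, so it is flagged as underrepresented.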

Data labelling bias can happen when the human annotators who label training data are themselves biased or apply arbitrary standards, which in turn produces a biased AI model.
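
Inconsistent labelling standards can sometimes be surfaced by measuring how often two annotators agree on the same items. The sketch below computes Cohen's kappa, a standard agreement statistic that corrects for chance agreement; the labels and annotator data are made up for illustration. Note that a high score only shows the annotators are consistent with each other, not that they are unbiased, since both may share the same prejudice.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same items.

    1.0 means perfect agreement; 0.0 means agreement no better than chance.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")

    n = len(labels_a)
    # Fraction of items on which the two annotators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same six items.
annotator_1 = ["toxic", "ok", "ok", "toxic", "ok", "ok"]
annotator_2 = ["toxic", "ok", "toxic", "toxic", "ok", "ok"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

A low kappa is a prompt to revisit the labelling guidelines before the data is used for training.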

Algorithmic bias can happen when AI systems’ algorithms reinforce or exacerbate prejudices already present in the data.

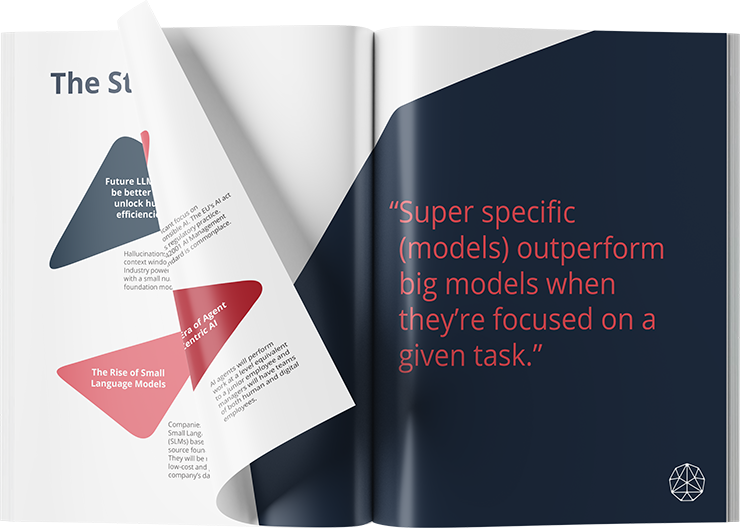

Once the root causes of bias and discrimination in AI systems are understood, it is crucial to create AI models that are reliable, ethical, and fair. This can involve using varied and representative datasets, building algorithms that are transparent and easy to interpret, and regularly auditing and testing AI systems for bias and discrimination.
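
As a rough idea of what a basic audit can look like, the sketch below compares how often a system returns a favourable decision for each group and reports the gap between the best and worst treated groups (often called the demographic parity difference). The decisions and group labels are invented for illustration, and this is only one of many fairness measures; which one is appropriate depends on the application.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of favourable decisions (1 = favourable, 0 = not) for each group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favourable[group] += int(decision)
    return {group: favourable[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: eight automated decisions across two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(rates)
print(rates, gap)
```

In this made-up sample, group "A" receives a favourable decision 75% of the time and group "B" only 25%, a gap large enough to warrant a closer look at the model and its training data.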

Building safeguards like supervision systems and decision-making frameworks is also necessary to help ensure that AI technologies are used ethically and responsibly.

Establishing governance and oversight frameworks that support accountability and transparency in AI systems is also crucial.

For this reason, Red Marble AI has introduced a new software platform to give you greater visibility of your AI program and make it easier for you to design process checks and guardrails around it.

Contact us if you’d like to learn more.